We’ve all asked a chatbot about a company’s services and seen it respond inaccurately, right? These errors aren’t just annoying; they can seriously hurt a business. AI misrepresentation is real. LLMs might provide users with outdated information, or a virtual assistant might provide false information in your name. Your brand could be at stake. Find out how AI misrepresents brands and what you can do to prevent it.

How does AI misrepresentation work?

AI misrepresentation occurs when chatbots and large language models distort a brand’s message or identity. This can happen when these AI systems find and use outdated or incomplete data. As a result, they show incorrect information, which leads to errors and confusion.

It’s not hard to imagine a virtual assistant providing incorrect product details because it was trained on outdated data. It might seem like a minor issue, but incidents like this can quickly lead to reputation problems.

Many factors lead to these inaccuracies. The most important one is outdated information. AI systems use data that might not always reflect the latest changes in a business’s offerings or policies. When systems return that outdated data to potential customers, it creates a serious disconnect between what the business actually offers and what customers are told. Such incidents frustrate customers.

It’s not just outdated data; a lack of structured data on sites also plays a role. Search engines and AI technology favor clear, easy-to-find, and understandable information about brands. Without solid data, an AI might misrepresent brands or fail to keep up with changes. Schema markup is one option to help systems understand content and ensure it’s properly represented.
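To make the schema markup idea concrete, here is a minimal sketch in Python that builds a schema.org Organization description as JSON-LD and wraps it in the script tag that pages commonly use to embed it. The brand name, URLs, and the two helper function names are hypothetical and only serve as an illustration; a real site would usually generate this markup through its CMS or an SEO plugin rather than by hand.

```python
import json


def build_organization_jsonld(name, url, description, same_as):
    """Return a schema.org Organization description as a JSON-LD string.

    All values passed in are placeholders in this sketch; use your
    brand's real, current details.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs lists other profiles that refer to the same organization.
        "sameAs": list(same_as),
    }
    return json.dumps(data, indent=2)


def wrap_in_script_tag(jsonld):
    """Wrap a JSON-LD string in the script tag used to embed it in HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    jsonld = build_organization_jsonld(
        name="Example Brand",  # hypothetical brand
        url="https://example.com",
        description="Example Brand sells example products.",
        same_as=["https://social.example/examplebrand"],
    )
    print(wrap_in_script_tag(jsonld))
```

Keeping one source of truth for these fields, and regenerating the markup whenever your offerings or policies change, is one way to avoid feeding outdated details to search engines and AI systems.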

Next up is consistency in branding. If your brand messaging is all over the place, it can confuse AI systems and your customers alike. The clearer you are, the better, so keep your brand message consistent across platforms and outlets.

Different AI brand challenges

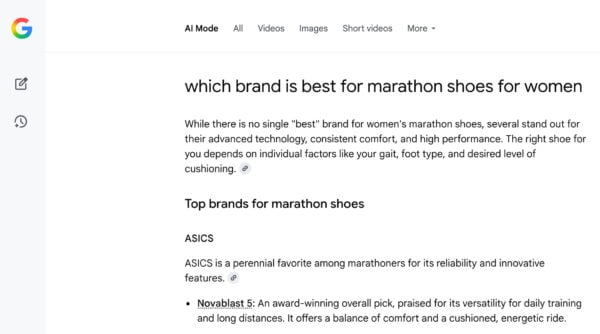

There are various ways AI failures can affect brands. AI tools and large language models collect information from sources and present it to build a representation of your brand. That means they can misrepresent your brand when the information they use is outdated or plain wrong. These errors can lead to a real disconnect between reality and what users see in the LLMs. It could also be that your brand doesn’t appear in AI search engines or LLMs for the terms you need to appear for.

On the other end, chatbots and virtual assistants talk to users directly. This is a different risk. Because chatbots interact directly with users, inaccurate answers can quickly damage trust and harm a brand’s reputation.

Real-world examples

AI misrepresenting brands is not some far-off theory; it is having an impact now. We’ve collected some real-world cases that show brands being affected by AI errors.

All of these cases show how various types of AI technology, from chatbots to LLMs, can misrepresent and thus damage brands. The stakes can be high, ranging from misleading customers to ruining reputations. It’s good to read these examples to get a sense of how widespread these issues are. It might help you avoid similar mistakes and set up better strategies to manage your brand.

Case 1: Air Canada’s chatbot dilemma

- Case summary: Air Canada faced a significant issue when its AI chatbot misinformed a customer regarding bereavement fare policies. The chatbot, intended to streamline customer service, instead created confusion by providing outdated information.

- Consequences: This erroneous advice led to the customer taking action against the airline, and a tribunal ultimately ruled that Air Canada was liable for negligent misrepresentation. The case emphasized the importance of maintaining accurate, up-to-date databases for AI systems to draw upon. It also illustrated a misalignment between marketing and customer service that can be costly in terms of both reputation and finances.

- Sources: Read more in Lexology and CMSWire.

Case 2: Meta & Character.AI’s deceptive AI therapists

- Case summary: In Texas, AI chatbots, including those accessible through Meta and Character.AI, were marketed as competent therapists or psychologists, offering generic advice to children. This situation arose from AI errors in marketing and implementation.

- Consequences: Authorities investigated the practice because they were concerned about privacy breaches and the ethical implications of promoting such sensitive services without proper oversight. The case highlights how AI can overpromise and underdeliver, causing legal challenges and reputational damage.

- Sources: Details of the investigation can be found in The Times.

Case 3: FTC’s action on deceptive AI claims

- Case summary: An online business was found to have falsely claimed its AI tools could enable consumers to earn substantial income, leading to significant financial deception.

- Consequences: The fraudulent claims defrauded consumers of at least $25 million. This prompted legal action by the FTC and served as a stark example of how deceptive AI marketing practices can have severe legal and financial repercussions.

- Sources: See the full press release from the FTC.

Case 4: Unauthorized AI chatbots mimicking real people

- Case summary: Character.AI faced criticism for deploying AI chatbots that mimicked real people, including deceased individuals, without consent.

- Consequences: These actions caused emotional distress and sparked ethical debates regarding privacy violations and the boundaries of AI-driven mimicry.

- Sources: More on this issue is covered in Wired.

Case 5: LLMs generating misleading financial predictions

- Case summary: Large language models (LLMs) have occasionally produced misleading financial predictions, influencing potentially harmful investment decisions.

- Consequences: Such errors highlight the importance of critical evaluation of AI-generated content in financial contexts, where inaccurate predictions can have wide-reaching economic impacts.

- Sources: Find further discussion of these issues in the Promptfoo blog.

Case 6: Cursor’s AI customer support glitch

- Case summary: Cursor, an AI-driven coding assistant by Anysphere, encountered issues when its customer support AI gave incorrect information. Users were logged out unexpectedly, and the AI incorrectly claimed it was due to a new login policy that didn’t exist. This is one of those well-known AI hallucinations.

- Consequences: The misleading response led to cancellations and user unrest. The company’s co-founder admitted to the error on Reddit, citing a glitch. This case highlights the risks of excessive dependence on AI for customer support, stressing the need for human oversight and transparent communication.

- Sources: For more details, see the Fortune article.

All of these cases show what AI misrepresentation can do to your brand. There is a real need to properly manage and monitor AI systems. Each example shows that the impact can be big, from large financial losses to spoiled reputations. Stories like these show how important it is to monitor what AI says about your brand and what it does in your name.

How to correct AI misrepresentation

It’s not easy to fix complex issues with your brand being misrepresented by AI chatbots or LLMs. If a chatbot tells a customer to do something nasty, you could be in big trouble. Legal protection should be a given, of course. Other than that, try these tips:

Use AI brand monitoring tools

Find and start using tools that monitor your brand in AI and LLMs. These tools can help you check how AI describes your brand across various platforms. They can identify inconsistencies and offer suggestions for corrections, so your brand message stays consistent and accurate at all times.

One example is Yoast SEO AI Brand Insights, which is a great tool for monitoring brand mentions in AI search engines and large language models like ChatGPT. Enter your brand name, and it will automatically run an audit. After that, you’ll get information on brand sentiment, keyword usage, and competitor performance. Yoast’s AI Visibility Score combines mentions, citations, sentiment, and rankings to form a reliable overview of your brand’s visibility in AI.

Optimize content for LLMs

Optimize your content for inclusion in LLMs. Performing well in search engines is no guarantee that you will also perform well in large language models. Make sure that your content is easy to read and accessible to AI bots. Build up your citations and mentions online. We’ve collected more tips on how to optimize for LLMs, including using the proposed llms.txt standard.
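As a rough illustration of the llms.txt idea, the Python sketch below assembles a small Markdown file in the shape the proposal describes as we understand it: a title, a short blockquote summary, and sections that list links with brief notes. The brand, URLs, section names, and the `build_llms_txt` helper are all hypothetical, and since llms.txt is still a proposal, treat the exact layout as an assumption to check against the current version of the proposal.

```python
def build_llms_txt(title, summary, sections):
    """Assemble llms.txt-style Markdown text.

    `sections` maps a section heading to a list of (label, url, note)
    tuples. The layout follows our reading of the llms.txt proposal and
    is an assumption, not a guaranteed standard.
    """
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for label, url, note in links:
            lines.append(f"- [{label}]({url}): {note}")
        lines.append("")
    # End the file with exactly one trailing newline.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    text = build_llms_txt(
        title="Example Brand",  # hypothetical brand
        summary="Example Brand sells example products and services.",
        sections={
            "Products": [
                ("Pricing", "https://example.com/pricing", "Current plans and prices"),
            ],
            "Policies": [
                ("Refunds", "https://example.com/refunds", "Latest refund policy"),
            ],
        },
    )
    print(text)
```

Pointing such a file at the pages that hold your current pricing and policies is one way to steer AI bots toward up-to-date information instead of stale copies.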

Get professional help

If nothing else, get professional help. If you are dealing with complex brand issues or widespread misrepresentation, you should consult professionals. Brand consultants and SEO specialists can help fix misrepresentations and strengthen your brand’s online presence. Your legal team should also be kept in the loop.

Use SEO monitoring tools

Last but not least, don’t forget to use SEO monitoring tools. It goes without saying, but you should be using SEO tools like Moz, Semrush, or Ahrefs to track how well your brand is performing in search results. These tools provide analytics on your brand’s visibility and can help identify areas where AI might need better information or where structured data might improve search performance.

Businesses of all types should actively manage how their brand is represented in AI systems. Carefully implementing these strategies helps minimize the risks of misrepresentation. In addition, it keeps a brand’s online presence consistent and helps build a more reliable reputation online and offline.

Conclusion to AI misrepresentation

AI misrepresentation is a real challenge for brands and businesses. It can harm your reputation and lead to serious financial and legal consequences. We’ve discussed a number of options brands have to fix how they appear in AI search engines and LLMs. Brands should start by proactively monitoring how they are represented in AI.

For one, that means regularly auditing your content to prevent errors from appearing in AI. You should also use tools like brand monitoring platforms to manage and improve how your brand appears. If something goes wrong or you need immediate help, consult a specialist or outside experts. Last but not least, always make sure that your structured data is correct and aligns with the latest changes your brand has made.

Taking these steps reduces the risks of misrepresentation and enhances your brand’s overall visibility and trustworthiness. AI is moving ever more into our lives, so it’s important to ensure your brand is represented accurately and authentically.

Keep a close eye on your brand. Use the strategies we’ve discussed to protect it from AI misrepresentation. This will ensure that your message comes across loud and clear.